Part 2 – Evaluation Methodologies: How to Effectively Test Digital Banking Platforms

Our Journey in This Part:

Building on the strategic foundation established in Part 1, we now turn to the practical approaches for evaluating potential DBP solutions. With your selection team assembled and requirements defined, it’s time to develop a structured methodology for testing and comparing platforms.

The Evaluation Spectrum: Demos, Sandboxes, and POCs

Vendor Demonstrations provide an initial glimpse into platform capabilities but are often highly scripted to showcase optimal functionality. While valuable for preliminary assessment, demos rarely reveal how a platform will perform against your specific requirements. They're useful for understanding the platform's interface and features at a high level, but you need to go deeper to determine whether it will work for your credit union.

Best Practices for Demos:

- Prepare specific scenarios you want to see demonstrated

- Request demonstrations of edge cases, not just ideal workflows

- Involve diverse stakeholders to gather different perspectives

- Document observations systematically using a consistent evaluation form

Sandbox Environments offer a controlled testing ground where your team can explore platform features without affecting production systems. These environments allow hands-on experience with the user interface, workflows, and basic functionality, though typically with sample data rather than your members' information. Sandboxes help you identify potential integration and usability challenges before you commit to a particular solution.

Best Practices for Sandbox Testing:

- Develop specific test scripts based on your most common use cases

- Assign different stakeholders to test specific functions relevant to their roles

- Test both standard and edge cases to understand limitations

- Document findings systematically, including screenshots and process flows

Proof of Concept (POC) implementations represent the most comprehensive pre-purchase evaluation method. A well-structured POC involves implementing the platform with a subset of your data and testing specific use cases relevant to your credit union’s operations. This approach provides the most realistic preview of how the platform will perform in your environment and validates the platform’s ability to meet your specific business requirements. While POCs typically require a more significant investment of time (usually 4-8 weeks) and resources (potentially $25,000-$75,000 depending on scope), they provide the most valuable insights and can significantly reduce implementation risks. Many vendors offer POCs at reduced or no cost, especially for larger potential contracts, though this often comes with expectations of commitment if success criteria are met.

Best Practices for POCs:

- Define clear success criteria before beginning

- Select a representative subset of data for testing

- Test integration with your actual core banking system

- Involve end-users in testing to gather usability feedback

- Document all findings, issues, and workarounds

The progression from demos to sandbox testing to POCs represents increasing levels of investment—both from the credit union and the vendor—but also delivers increasingly valuable insights. While not every credit union can justify a full POC for multiple vendors, at a minimum, you should insist on sandbox access for your shortlisted options.

Key Evaluation Metrics for Comprehensive DBP Assessment

Effective platform evaluation requires clear, measurable criteria. Consider these essential metrics when testing potential DBP solutions:

User Experience (UX) – Evaluate how intuitive the design is, how easy the platform is to navigate, and which accessibility features it provides. Modern platforms should offer enhanced member experiences through contemporary UX design patterns, personalization capabilities, and omnichannel delivery.

Performance Metrics – Measure response times for common transactions under various load conditions. Pay particular attention to authentication, account overview loading, transaction history retrieval, and funds transfers. A new platform may perform differently from your current one, and those differences may require infrastructure adjustments.

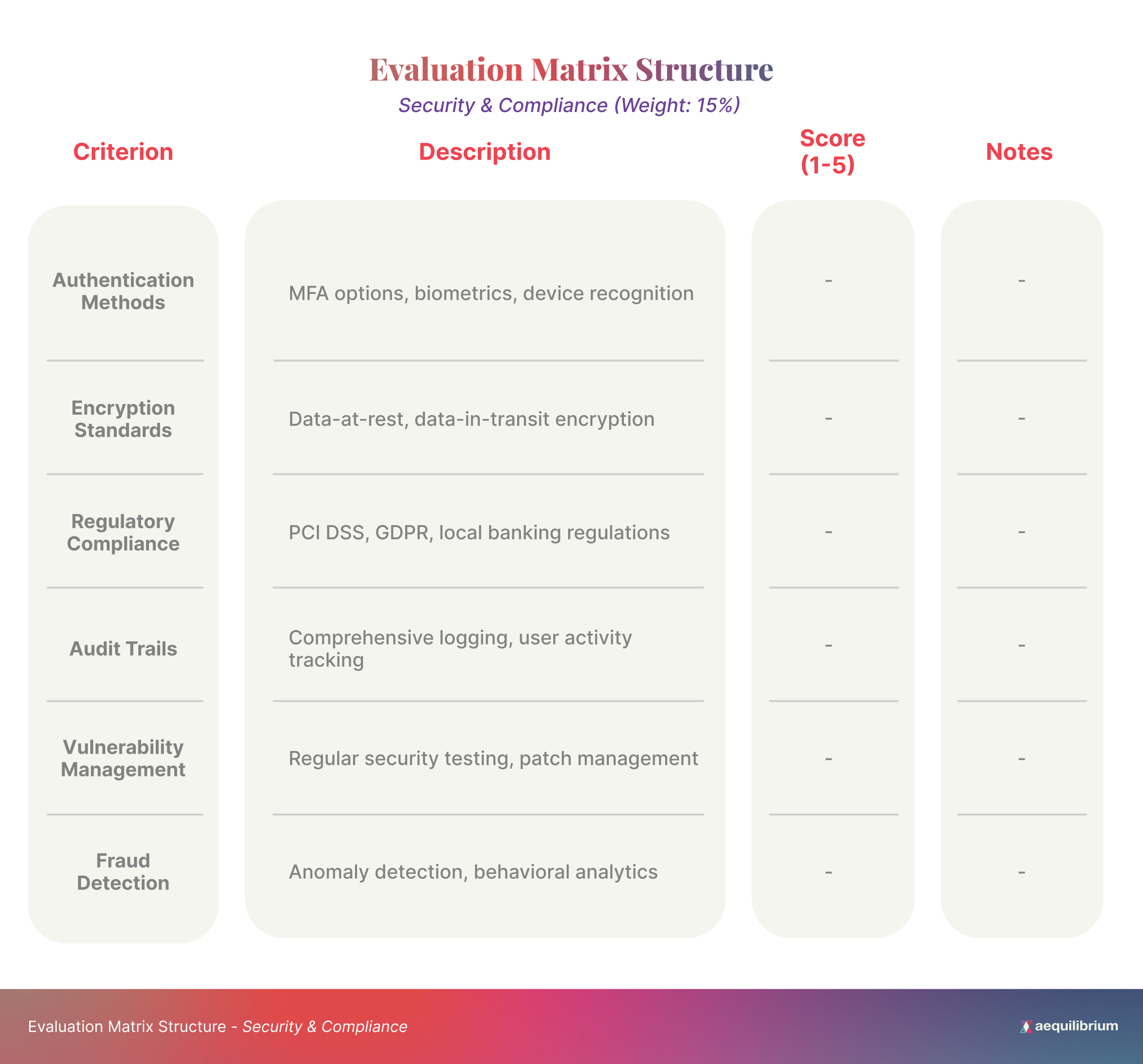
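To compare response times consistently across vendors, it helps to summarize every platform's timings the same way. The sketch below is a minimal example, assuming your team can wrap one operation (for example, loading the account overview in a sandbox) in a zero-argument Python callable; the function names, the sample timings, and the 2,000 ms budget are hypothetical. It reports the median and the 95th percentile using the nearest-rank method.

```python
import math
import statistics
import time
from typing import Callable


def time_calls(operation: Callable[[], object], runs: int) -> list[float]:
    """Call a zero-argument operation `runs` times; return each duration in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def percentile(samples_ms: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct% of all samples are less than or equal to it."""
    if not samples_ms:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(samples_ms: list[float], budget_ms: float) -> dict:
    """Median and 95th percentile, plus whether p95 stays within the budget."""
    p95 = percentile(samples_ms, 95)
    return {
        "count": len(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "p95_ms": p95,
        "within_budget": p95 <= budget_ms,
    }


# Hypothetical account-overview timings (ms) collected during a sandbox session.
overview_ms = [420, 510, 480, 1900, 530, 610, 450, 495, 2300, 505]
print(summarize(overview_ms, budget_ms=2000))
```

With these sample values the median is 507.5 ms but the 95th percentile is 2,300 ms, so one slow outlier fails the budget even though a typical request looks fast; this is why a high percentile is worth tracking alongside the median. Note that `time_calls` measures sequential calls only; simulating concurrent load requires a dedicated load-testing tool.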

Security Robustness – Test authentication mechanisms (including multi-factor authentication), encryption standards, and access controls, and verify compliance with applicable regulations and industry standards such as PCI DSS.

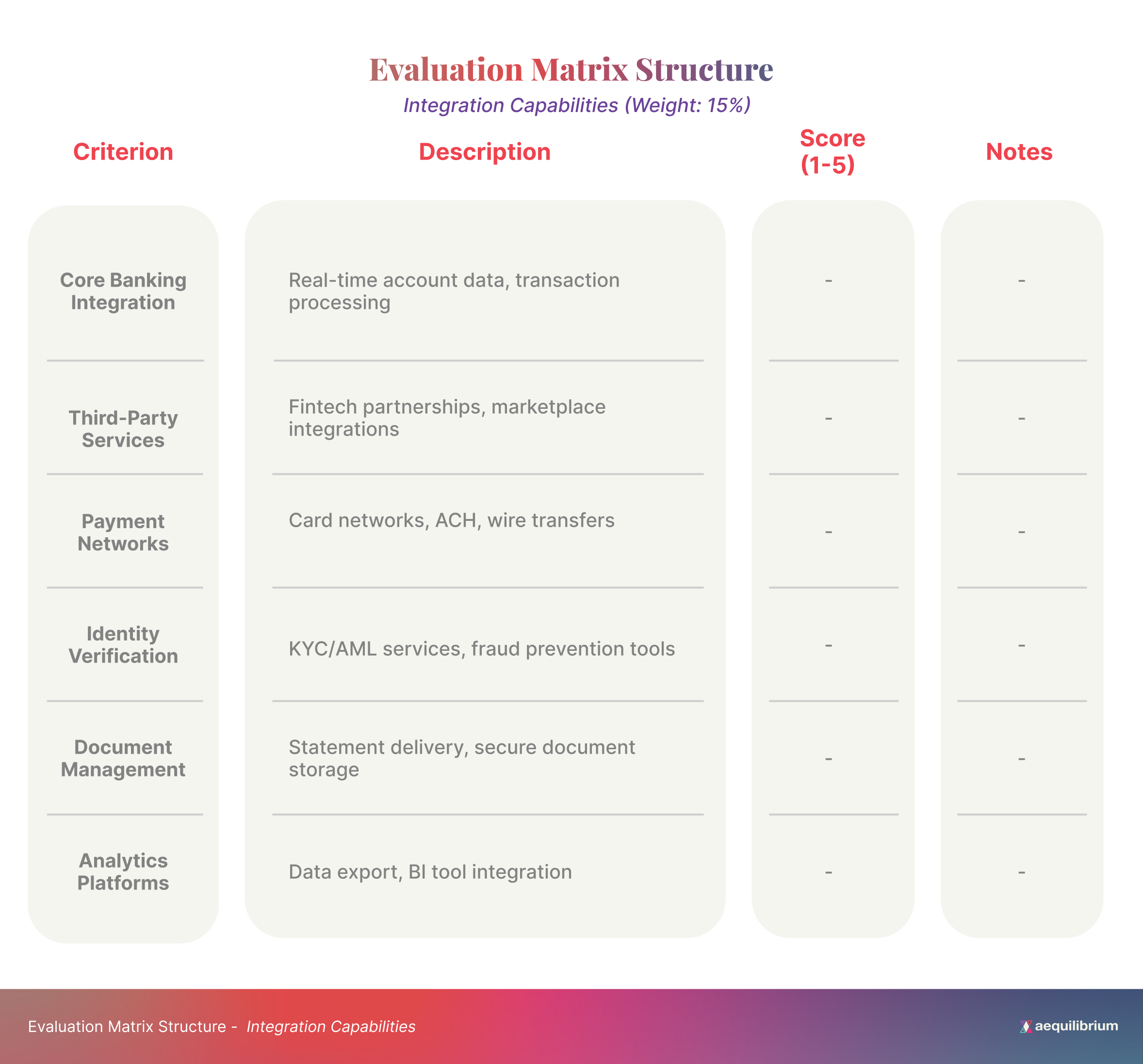

Integration Capabilities – Assess the platform’s ability to connect with your existing systems through available APIs, webhooks, or other integration methods. Modern platforms should offer an API-first approach that simplifies third-party integrations.

Customization Flexibility – Evaluate how easily the platform can be tailored to your credit union’s branding, workflow requirements, and unique member needs.

Scalability Potential – Consider how the platform will accommodate your growth plans, including handling increased transaction volumes and supporting new products or services. Cloud-native architecture and microservices-based design can provide significant advantages here.

Technical Architecture – Assess whether the platform gives your credit union a path to a cloud-native, microservices-based, API-first architecture that can enhance business agility.

Analytics Capabilities – Evaluate the platform’s advanced reporting capabilities and ability to provide real-time insights into member behavior.

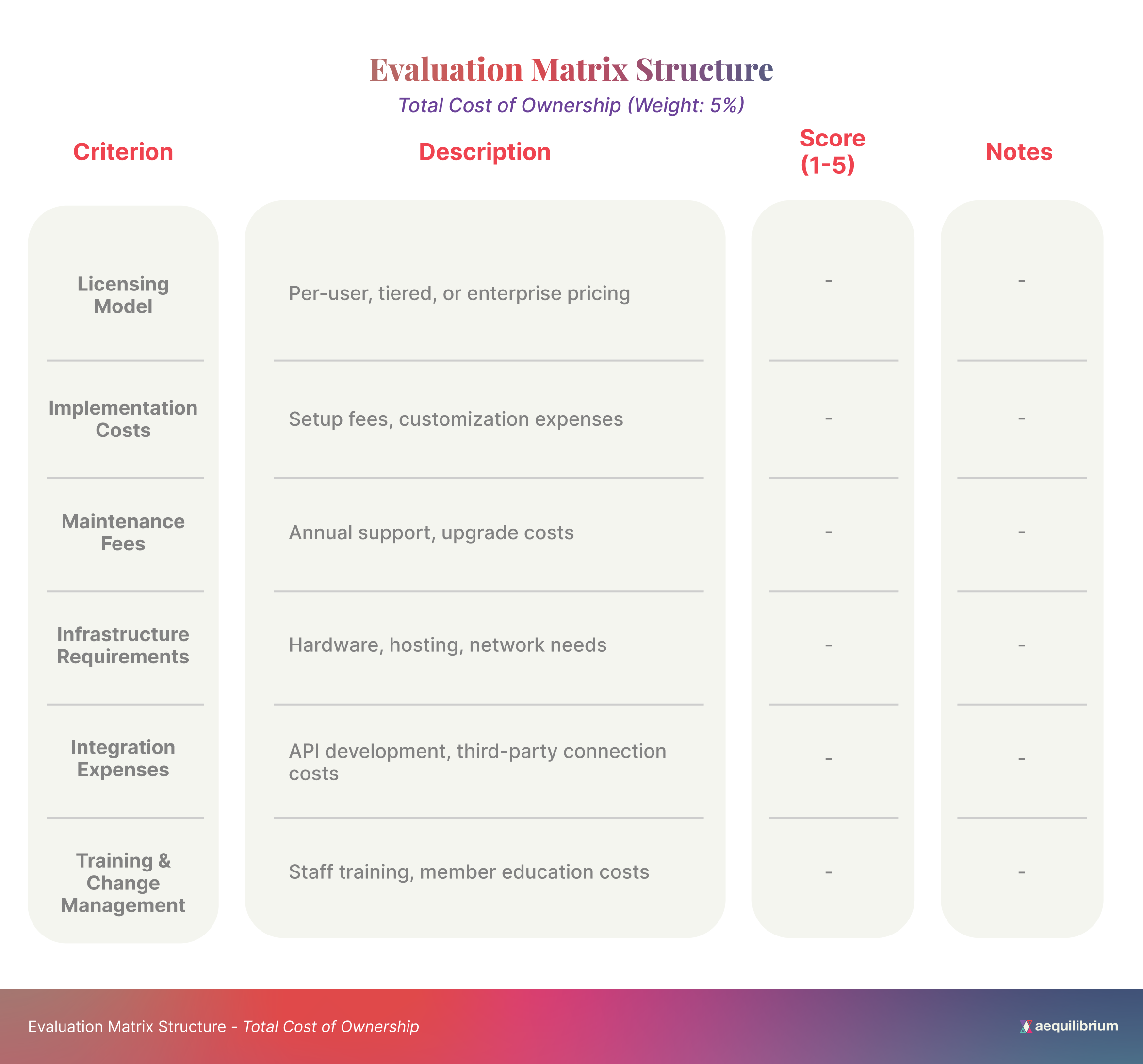

Total Cost of Ownership – Consider all costs, including licensing, implementation, and maintenance, over the platform’s expected life.
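A simple way to keep cost comparisons fair is to total one-time and recurring costs over the same horizon for every vendor. The sketch below assumes a fixed expected life in years and an optional annual escalation rate on recurring costs; the `CostProfile` fields and all dollar figures are hypothetical placeholders, and the total is undiscounted (it does not convert future costs to present value).

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Hypothetical cost inputs for one vendor, in dollars."""
    implementation: float           # one-time: integration, conversion, training
    annual_licensing: float         # recurring license or subscription fees
    annual_maintenance: float       # recurring support, hosting, upgrades
    annual_escalation: float = 0.0  # e.g. 0.03 for a 3% yearly increase


def total_cost_of_ownership(profile: CostProfile, years: int) -> float:
    """Undiscounted TCO: one-time costs plus recurring costs for each year,
    with recurring costs growing by the escalation rate after year one."""
    if years <= 0:
        raise ValueError("years must be positive")
    recurring = profile.annual_licensing + profile.annual_maintenance
    total = profile.implementation
    for year in range(years):
        total += recurring * (1 + profile.annual_escalation) ** year
    return round(total, 2)


# Hypothetical vendor quote evaluated over a five-year expected life.
vendor_a = CostProfile(implementation=150_000, annual_licensing=80_000,
                       annual_maintenance=20_000, annual_escalation=0.03)
print(total_cost_of_ownership(vendor_a, years=5))
```

Running every shortlisted vendor through the same function, with the same horizon, keeps a low first-year price from hiding higher recurring costs later in the contract.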

Building Your Evaluation Matrix for Structured Testing

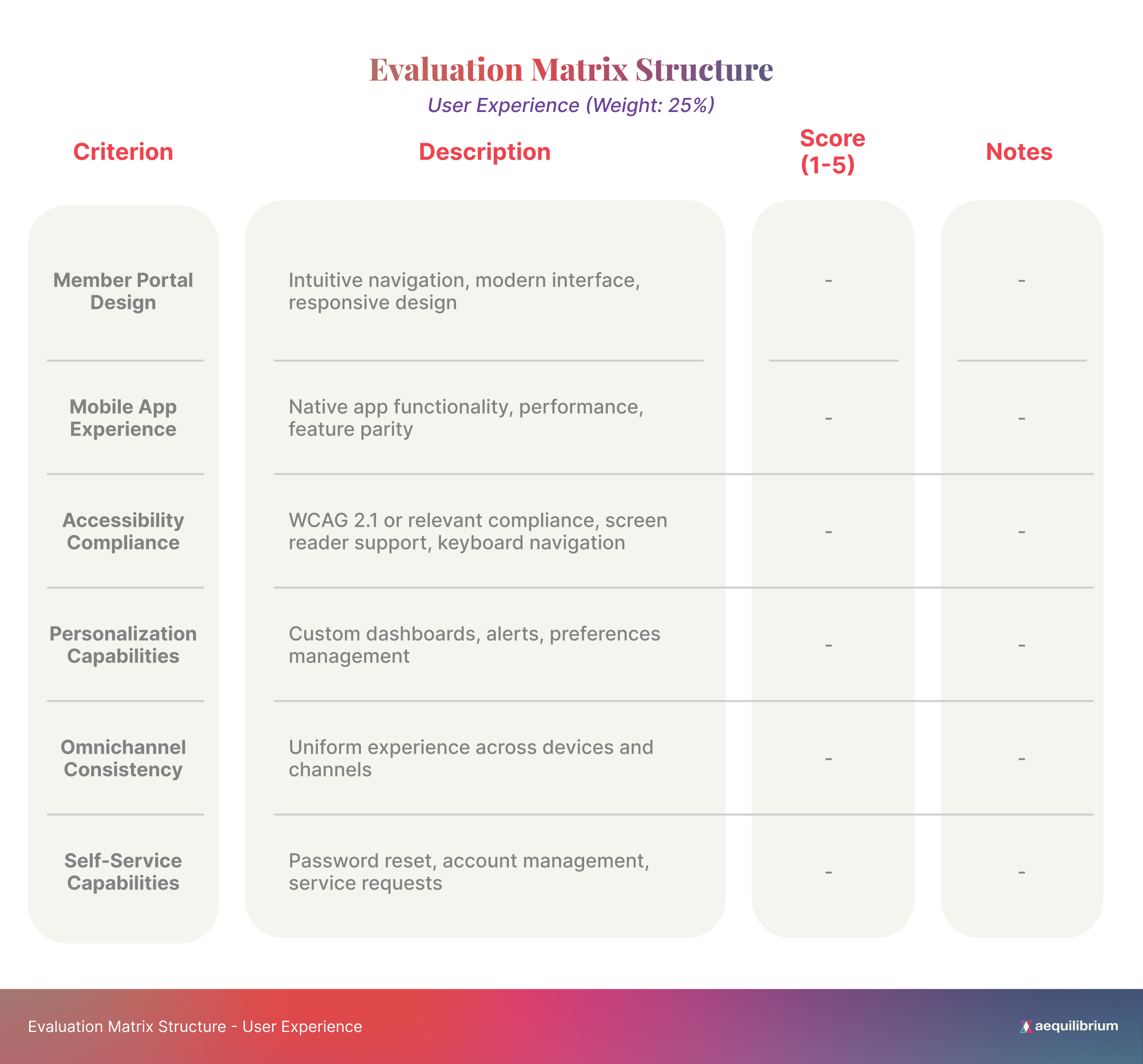

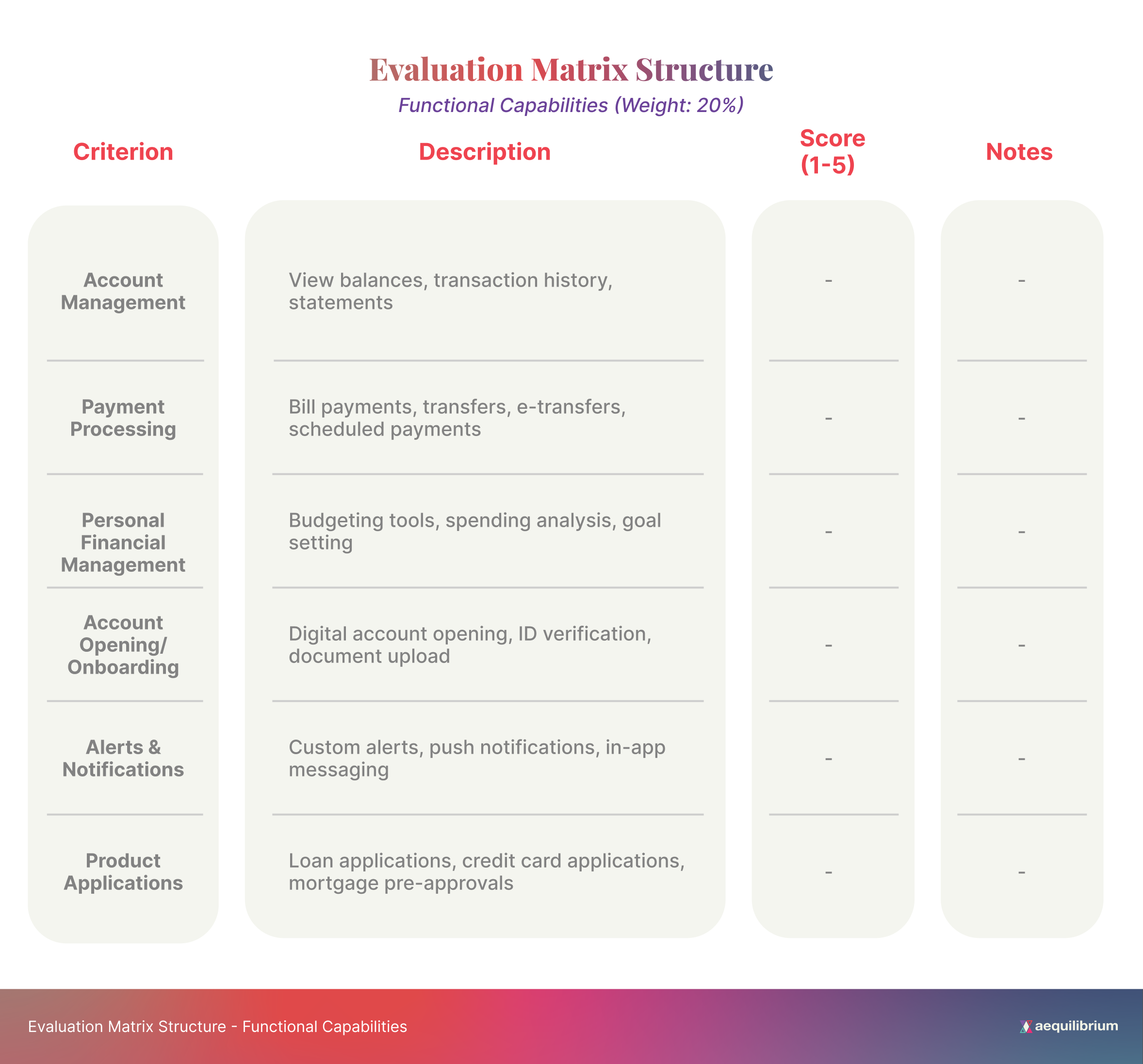

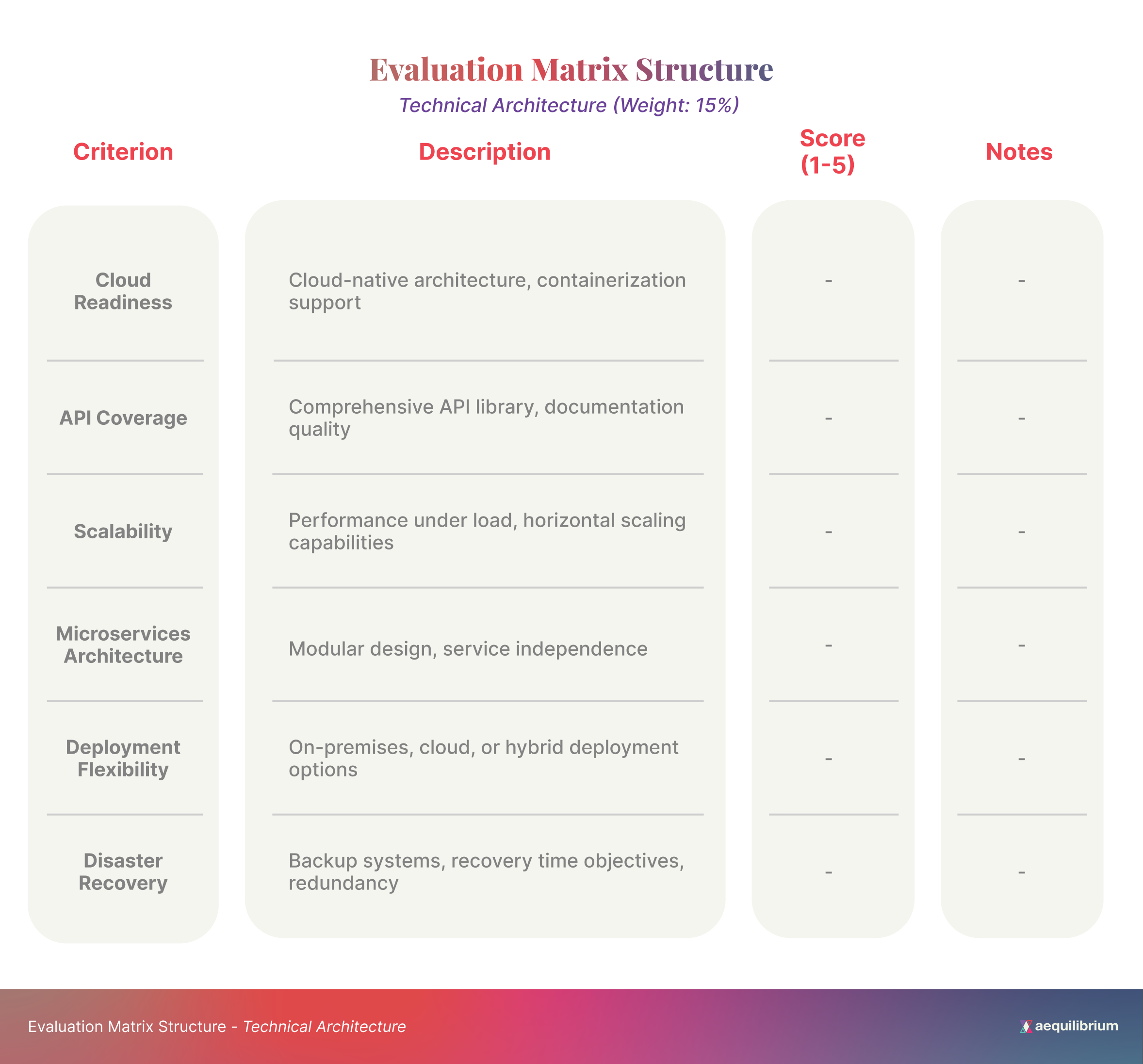

A comprehensive evaluation matrix provides structure to your testing process and ensures consistent assessment across different platforms. Your matrix should include:

Weighted Criteria Categories – Based on your credit union’s priorities, assign importance weights to different evaluation areas. For example, member experience might carry more weight than administrative features.

Specific Test Scenarios – Develop test cases that reflect real-world scenarios, covering both common workflows and edge cases. Mapping complete member journeys is essential for identifying every workflow and feature members rely on today, so that the new platform leaves no functionality gaps.

Scoring Methodology – Establish a consistent rating system (e.g., a 1-5 scale) for each criterion, with clear definitions of what constitutes each score level.

Stakeholder Input Sections – Include dedicated areas for feedback from different stakeholder groups, including member-facing staff, IT personnel, compliance officers, and executive leadership.

Comparative Analysis Tools – Design the matrix to facilitate side-by-side comparison of different vendors’ solutions.

Sample Evaluation Matrix for Structured Testing of Digital Banking Platforms

This matrix will help credit unions systematically assess potential DBP solutions before making a final selection.

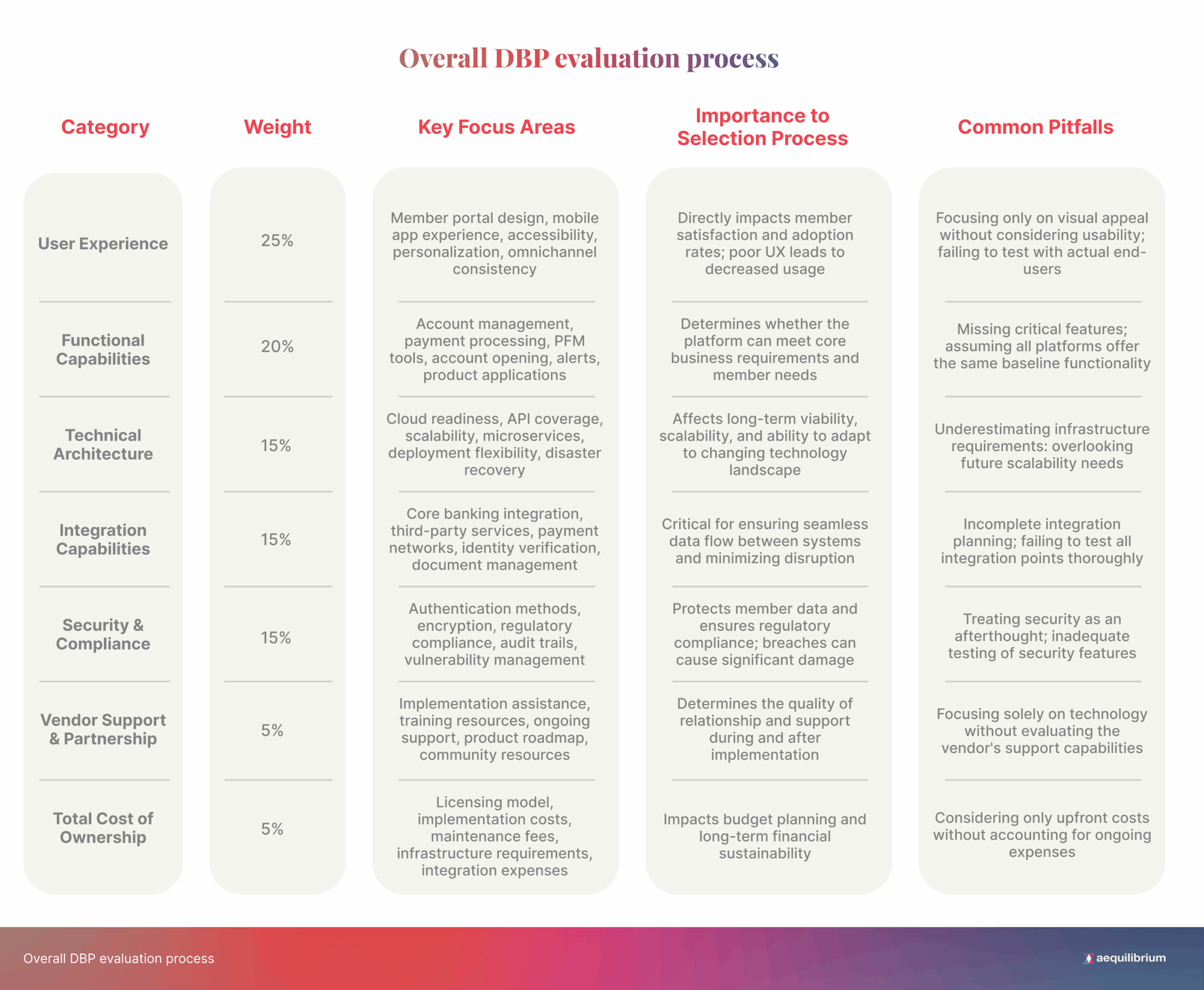

Comparative Analysis of Evaluation Matrix Categories

This evaluation matrix provides a structured framework for testing potential Digital Banking Platforms. By systematically assessing each platform against these criteria and documenting the results, credit unions can make informed decisions based on objective data rather than vendor promises or subjective impressions.

The matrix should be customized to reflect your credit union's specific priorities and requirements. Consider adjusting the category weights or adding criteria that align with your strategic objectives and member needs.

The following table provides a quick reference for understanding how each category above contributes to the overall DBP evaluation process:

Test Scenario Examples

Core Banking Integration Test Scenarios

- Account Information Retrieval

  - Test Case: Verify real-time account balance display

  - Expected Result: Accurate balances shown within 2 seconds of login

  - Test Data: Sample accounts with known balances

  - Pass/Fail Criteria: Balance matches the core banking system to within $0.01

- Transaction Processing

  - Test Case: Execute a funds transfer between accounts

  - Expected Result: Transfer completes successfully and is reflected in both accounts

  - Test Data: Source and destination accounts with sufficient funds

  - Pass/Fail Criteria: Transaction appears in history within 30 seconds
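If your team records the raw results of these runs (the balance shown by the platform, the same balance queried directly in the core, and the elapsed times), the pass/fail criteria can be applied mechanically so every vendor is judged identically. This is a minimal sketch: the record types and field names are hypothetical, the values are assumed to come from your own test harness, and treating "reflects in both accounts" as "appears in both account histories" is an interpretation. `Decimal` is used so the 0.01 balance tolerance isn't distorted by floating-point rounding.

```python
from dataclasses import dataclass
from decimal import Decimal

BALANCE_TOLERANCE = Decimal("0.01")  # balance must match the core within 0.01
BALANCE_MAX_SECONDS = 2.0            # balance shown within 2 seconds of login
HISTORY_MAX_SECONDS = 30.0           # transfer visible in history within 30 seconds


@dataclass
class BalanceResult:
    platform_balance: Decimal   # balance displayed by the DBP
    core_balance: Decimal       # same account queried directly in the core
    seconds_after_login: float  # time until the balance was displayed


@dataclass
class TransferResult:
    completed: bool
    in_source_history: bool
    in_destination_history: bool
    seconds_to_history: float   # time until the transfer appeared in history


def balance_passes(r: BalanceResult) -> bool:
    """Account Information Retrieval: accurate and fast enough."""
    return (abs(r.platform_balance - r.core_balance) <= BALANCE_TOLERANCE
            and r.seconds_after_login <= BALANCE_MAX_SECONDS)


def transfer_passes(r: TransferResult) -> bool:
    """Transaction Processing: completed, visible on both sides, in time."""
    return (r.completed and r.in_source_history and r.in_destination_history
            and r.seconds_to_history <= HISTORY_MAX_SECONDS)


sample = BalanceResult(platform_balance=Decimal("1250.00"),
                       core_balance=Decimal("1250.00"),
                       seconds_after_login=1.4)
print(balance_passes(sample))
```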

User Experience Test Scenarios

- Mobile Responsiveness

  - Test Case: Access the platform on multiple device types and screen sizes

  - Expected Result: UI adapts appropriately to each form factor

  - Test Data: iPhone and Android phones, tablets, and desktop browsers

  - Pass/Fail Criteria: All functions accessible without horizontal scrolling

- Accessibility Compliance

  - Test Case: Navigate the platform using only a keyboard and a screen reader

  - Expected Result: All functions accessible via keyboard, with proper ARIA labels

  - Test Data: Standard user account

  - Pass/Fail Criteria: Complete core tasks without mouse interaction

Security Test Scenarios

- Multi-Factor Authentication

  - Test Case: Log in with MFA enabled

  - Expected Result: Secondary authentication prompt after password entry

  - Test Data: User with MFA configured

  - Pass/Fail Criteria: Account cannot be accessed without completing MFA

- Session Management

  - Test Case: Verify session timeout after inactivity

  - Expected Result: User logged out after the configured timeout period

  - Test Data: Standard user account

  - Pass/Fail Criteria: Session terminates within 30 seconds of the configured timeout threshold
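The session-timeout criterion can be checked from two timestamps in your test log: the user's last activity and the moment the platform ended the session. "Within 30 seconds of the timeout threshold" can be read more than one way; this sketch assumes the stricter reading that the session must not end before the configured timeout and must end no more than 30 seconds after it. The function name and the example timestamps are hypothetical.

```python
from datetime import datetime, timedelta

GRACE = timedelta(seconds=30)  # allowed lag after the configured timeout


def session_timeout_passes(last_activity: datetime, logged_out_at: datetime,
                           configured_timeout: timedelta,
                           grace: timedelta = GRACE) -> bool:
    """Pass if the idle time before logout is at least the configured timeout
    (no premature logout) and at most timeout + grace (no lingering session)."""
    idle = logged_out_at - last_activity
    return configured_timeout <= idle <= configured_timeout + grace


# Hypothetical log entries for a platform configured with a 10-minute timeout.
last_click = datetime(2024, 1, 15, 9, 0, 0)
logout = datetime(2024, 1, 15, 9, 10, 12)
print(session_timeout_passes(last_click, logout, timedelta(minutes=10)))
```

Checking the lower bound as well catches platforms that log members out early, which is a usability problem rather than a security one but still worth recording.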

Scoring Methodology

For each criterion, use the following 1-5 scale:

- 1 (Poor) – Does not meet requirements; significant deficiencies

- 2 (Below Average) – Partially meets requirements with notable limitations

- 3 (Average) – Meets basic requirements adequately

- 4 (Good) – Exceeds requirements in some areas

- 5 (Excellent) – Significantly exceeds requirements; provides exceptional value

Weighted Score Calculation

- Calculate the average score for each category

- Multiply the category average by the category's weight (weights across all categories should total 100%)

- Sum all weighted category scores to get the final platform score, which stays on the same 1-5 scale
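The three calculation steps above translate directly into a few lines of code, which is useful once several evaluators are entering scores into a shared spreadsheet export. This sketch assumes each category's criterion scores are on the 1-5 scale and that weights are expressed as fractions summing to 1.0; the category names, weights, and scores are hypothetical.

```python
from statistics import mean


def weighted_platform_score(scores: dict[str, list[int]],
                            weights: dict[str, float]) -> float:
    """Weighted score on the 1-5 scale.

    scores:  category -> the 1-5 scores given to each criterion in it
    weights: category -> weight as a fraction; all weights must sum to 1.0
    """
    if set(scores) != set(weights):
        raise ValueError("every category needs both scores and a weight")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("category weights must total 100%")
    total = 0.0
    for category, criterion_scores in scores.items():
        if not criterion_scores or any(not 1 <= s <= 5 for s in criterion_scores):
            raise ValueError(f"{category}: need at least one score, each 1-5")
        # Average the category, multiply by its weight, and add to the total.
        total += mean(criterion_scores) * weights[category]
    return round(total, 2)


# Hypothetical categories, weights, and scores for one platform.
weights = {"member_experience": 0.30, "integration": 0.25,
           "security": 0.25, "cost": 0.20}
scores = {"member_experience": [4, 5, 4], "integration": [3, 4],
          "security": [5, 4, 5], "cost": [3]}
print(weighted_platform_score(scores, weights))
```

In this example member experience averages about 4.33 and contributes 1.30 of the final 3.94. Rejecting weights that don't total 100% keeps the result on the 1-5 scale, so scores remain comparable across platforms.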

Stakeholder Input Collection

Include dedicated sections for feedback from:

- Member-facing staff

- IT personnel

- Compliance officers

- Executive leadership

- Member focus groups (if applicable)

Comparative Analysis

Create a summary table showing the weighted scores of all evaluated platforms side by side, highlighting each solution’s strengths and weaknesses.
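A small helper can produce that side-by-side summary from each platform's category averages, ranking platforms by weighted score and naming each one's strongest and weakest category. This is a sketch under stated assumptions: the vendor names, category names, weights, and averages are hypothetical, and weights are again fractions summing to 1.0.

```python
def comparison_table(category_averages: dict[str, dict[str, float]],
                     weights: dict[str, float]) -> list[str]:
    """category_averages: platform -> {category: average 1-5 score}.
    Returns text rows sorted by weighted score (highest first), each showing
    the platform's strongest and weakest category."""
    rows = []
    for platform, cats in category_averages.items():
        weighted = sum(cats[c] * w for c, w in weights.items())
        strongest = max(cats, key=cats.get)
        weakest = min(cats, key=cats.get)
        rows.append((weighted, platform, strongest, weakest))
    rows.sort(key=lambda r: r[0], reverse=True)
    lines = [f"{'Platform':<12}{'Score':>6}  Strongest / Weakest"]
    for weighted, platform, strongest, weakest in rows:
        lines.append(f"{platform:<12}{weighted:>6.2f}  {strongest} / {weakest}")
    return lines


# Hypothetical category averages for two shortlisted vendors.
weights = {"experience": 0.40, "integration": 0.35, "cost": 0.25}
averages = {
    "Vendor A": {"experience": 4.5, "integration": 3.0, "cost": 4.0},
    "Vendor B": {"experience": 3.0, "integration": 4.5, "cost": 3.5},
}
print("\n".join(comparison_table(averages, weights)))
```

Listing the weakest category next to the overall score keeps a high total from hiding a serious gap, such as weak core integration, that the selection team may treat as disqualifying.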

Stakeholder Engagement: Ensuring Comprehensive Input

Different stakeholders will prioritize different aspects of the platform. Ensure comprehensive evaluation by:

Structured Feedback Collection – Create role-specific evaluation forms that focus on aspects relevant to each stakeholder group.

Collaborative Evaluation Sessions – Conduct group testing sessions where stakeholders can observe and discuss platform capabilities together.

Weighted Decision-Making – Acknowledge that some stakeholders’ input may carry more weight for certain criteria based on their expertise and role.

Regular Communication – Share findings across stakeholder groups to build consensus and identify potential concerns early.

Executive Summaries – Prepare concise summaries of evaluation findings for executive decision-makers, highlighting strategic implications.
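The Weighted Decision-Making point above can be made explicit by giving each stakeholder group a relative weight per criterion and combining their scores as a weighted average. The sketch below is one possible approach, not a prescribed method: the group names and weights are hypothetical, and groups that did not score a criterion are skipped with the remaining weights renormalized so the result stays on the 1-5 scale.

```python
def stakeholder_weighted_score(group_scores: dict[str, float],
                               group_weights: dict[str, float]) -> float:
    """Combine one criterion's 1-5 scores from several stakeholder groups.

    group_weights are relative importances (they need not sum to 1).
    Groups that did not score this criterion are skipped, and the
    remaining weights are renormalized so the result stays on the 1-5 scale.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for group, weight in group_weights.items():
        if group in group_scores:
            weighted_sum += group_scores[group] * weight
            total_weight += weight
    if total_weight <= 0:
        raise ValueError("no weighted stakeholder scores for this criterion")
    return weighted_sum / total_weight


# Hypothetical weights for a security criterion: IT and compliance count triple.
security_weights = {"it": 3, "compliance": 3, "member_facing": 1, "executive": 1}
security_scores = {"it": 4, "compliance": 5, "member_facing": 3, "executive": 4}
print(stakeholder_weighted_score(security_scores, security_weights))
```

Here the security criterion scores 4.25, pulled toward the IT and compliance ratings. For a member-experience criterion, the same function could instead give member-facing staff the highest weight.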

Key Takeaways

- Progress from demos to sandboxes to POCs for increasingly valuable insights

- Establish clear, measurable evaluation criteria across multiple dimensions

- Create a structured evaluation matrix with weighted categories

- Engage diverse stakeholders in the evaluation process

- Document findings systematically to support informed decision-making

Next in the Series

In Part 3, we’ll dive deeper into integration testing and technical validation, exploring how to ensure your selected DBP will work seamlessly with your existing systems and meet technical requirements.

Baris Tuncertan

Head of Technology


Stay ahead of the transition—follow us on LinkedIn for expert insights on evaluating, testing, and implementing your next digital banking platform.

If you’re navigating a platform transition and want to explore your options, reach out to our team directly.