iOS was the natural starting point for Polar. Since I'm primarily an iOS developer, we could build on my existing skills, and the project gave me the perfect chance to explore new iOS technologies and stretch my app development skills.

New frontiers

With Polar, we wanted to create a truly unique experience that shows off the vast capabilities of aequilibrium. Augmented reality (AR) was an obvious choice for many reasons: it's fun to work with, and it's an exciting new frontier to explore. AR is an emerging field in the tech landscape, and learning to build for it has since opened up ways to enhance many of our existing aequilibrium projects. It has also opened up entirely new kinds of apps that we look forward to creating, for clients or for ourselves, in the near future.

ARKit is Apple's built-in framework for creating AR experiences on iOS. Introduced in 2017 with iOS 11, it lets developers quickly build impressive AR apps with world tracking, image detection, and more. ARKit integrated well into our existing iOS development pipeline and was quick to get up and running. Other AR options exist, such as Google's ARCore, Vuforia, and Unity AR; however, for this project we chose simplicity and tight integration with the iOS ecosystem over additional features and complexity. Because ARKit already ships on hundreds of millions of iOS devices around the world, we didn't need to bundle a third-party AR library, which kept our app's download size small and convenient for users. While ARKit is limited to iOS and doesn't have every feature of larger AR frameworks like Vuforia, it was the perfect fit for Polar.

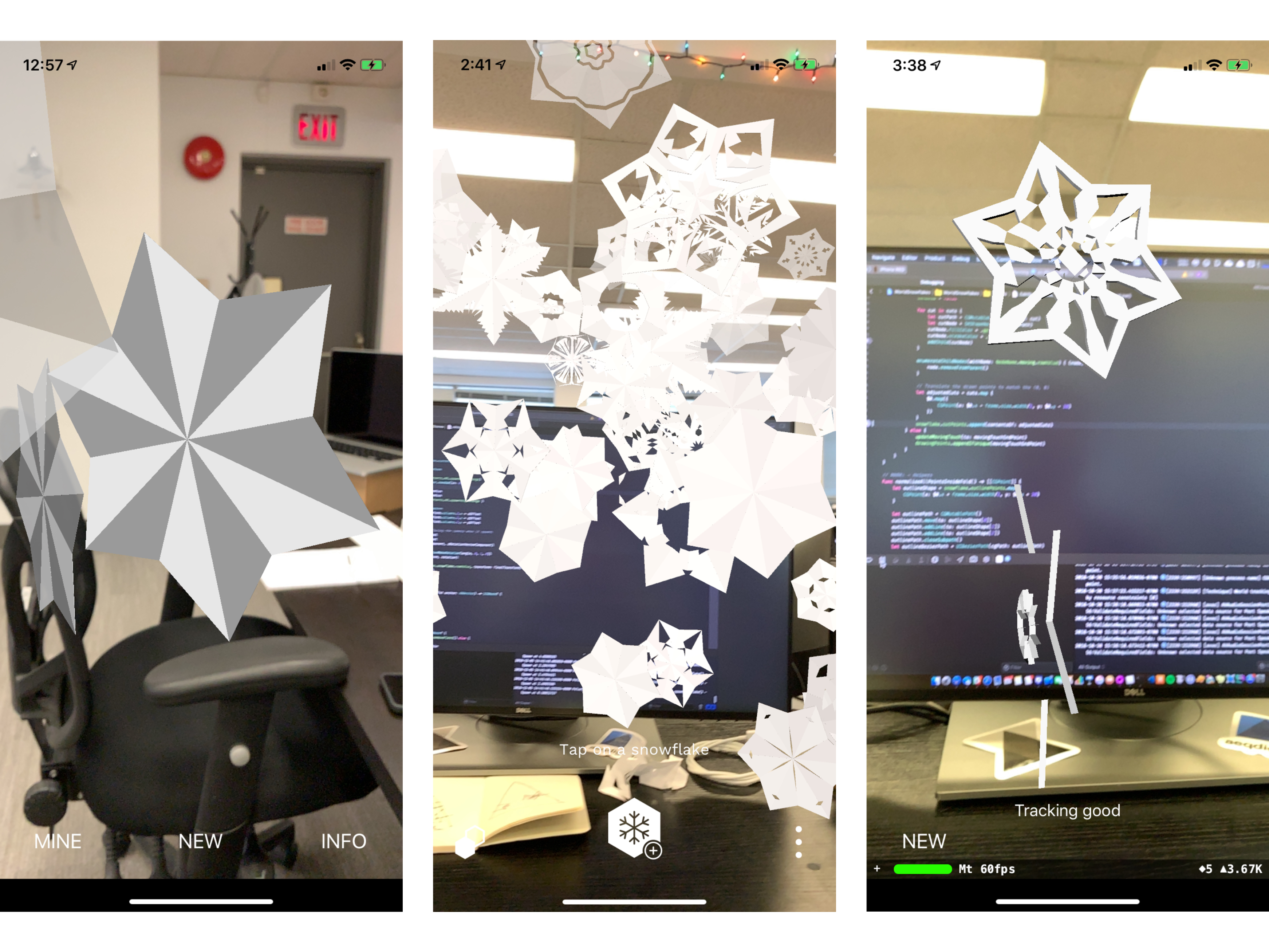

Some early work-in-progress Polar screenshots.

Proof of concept

When we started the project, we didn't know what would be possible. The first step was to create an exploratory plan to see whether any of our amazing ideas would work within our timeframe. My goal as the lead developer was to 1) build a proof of concept in less than a week and 2) use it to validate that ARKit, our chosen technology, could dynamically model snowflakes from user-created cuts and let users interact with them in the real world.

The prototype worked perfectly. Like any prototype, it was a very basic version of the final product, but it demonstrated the core functionality we needed before moving forward. It showed randomly floating snowflakes drawn from custom shapes and let users tap to “throw” snowballs that stopped when they hit an object. Snowball throwing didn't make it into the final product, but it showed the fun range of what ARKit makes possible on iOS. Maybe in Version 2…

Proof of concept app shows the first snowflakes and snowballs.

Building the world

In simple terms, an AR app is a 3D world rendered on top of the live camera view. An AR app can never truly “know” what the real world looks like, so developers rely on a framework that analyzes camera images and motion data to build an approximate 3D model of the surroundings. To render our virtual content, we chose SceneKit, Apple's 3D graphics framework, for its seamless integration with ARKit. SceneKit let us create and display virtual 3D content in that world.

Once ARKit had initialized and started tracking the world, any position in the real world could be mapped to our scene using an ARAnchor. Each anchor corresponded to a position in the real world and stayed fixed there as we moved or rotated the device. In this way, we could link the virtual world to the real world and then add our own virtual objects. Virtual content augmenting the real world is the core of the AR experience.

ARKit finding and displaying feature points in the real world.

Creating the snowflakes

The biggest AR challenge I faced was creating custom 3D shapes for the snowflakes. Most AR apps rely on a 3D artist to create models, which the app then places into the AR world. In Polar, however, every snowflake is unique, so we needed to create every model dynamically in the app!

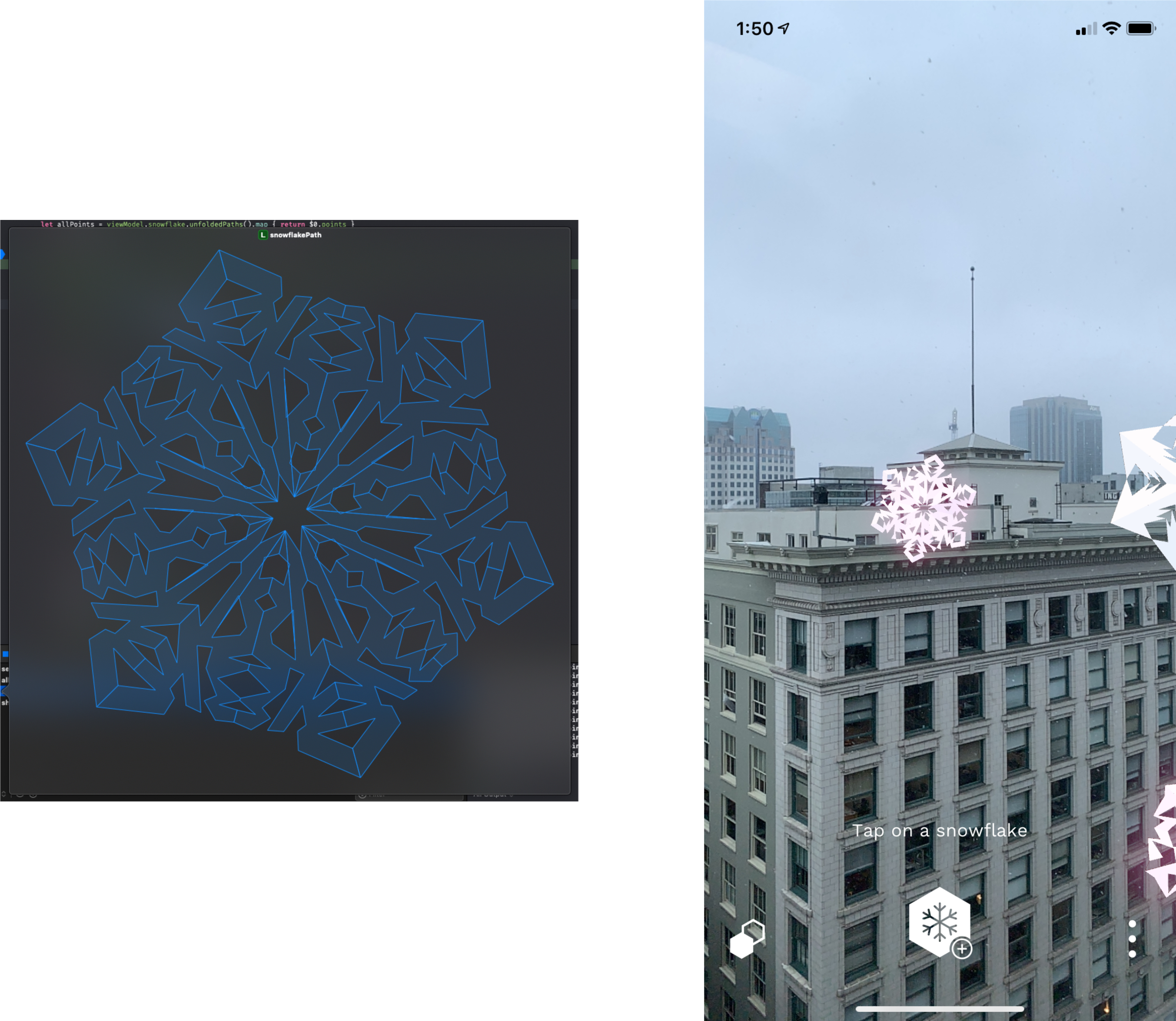

Luckily, SceneKit can create objects from a custom drawing path. A path is simply a series of points and instructions for drawing lines to make a shape. By combining this path with the desired size and thickness of each snowflake, SceneKit generated the 3D model for us. After using SceneKit to create our snowflakes, I worked with our UX designer Emily Chong to apply lighting, colors, animations, and a little magic to bring our snowflakes to life.
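
As a rough illustration of the path-to-model idea, here is a minimal sketch, not Polar's actual code. It assumes a hypothetical "half-arm profile" (points along one side of a single arm) as a stand-in for the user's cuts, since the post doesn't describe how cuts are stored. The profile is mirrored and rotated six times to form a closed outline, which SceneKit's `SCNShape(path:extrusionDepth:)` then extrudes into a solid. The function names and the 2 mm default thickness are assumptions, and the SceneKit part only compiles where UIKit and SceneKit are available.

```swift
import Foundation
#if canImport(UIKit) && canImport(SceneKit)
import UIKit
import SceneKit
#endif

/// Hypothetical helper: builds a closed, six-fold symmetric snowflake outline (in meters)
/// from one half-arm profile. `halfArm` lists points on one side of an arm pointing along +x,
/// ordered from the center outward, with y > 0. Every point should stay inside the
/// ±30° wedge (y < x * tan 30°) so neighboring arms don't overlap.
func snowflakeOutline(halfArm: [CGPoint]) -> [CGPoint] {
    // One full arm: the mirrored side out to the tip, then the original side back to the center
    // (counter-clockwise with y pointing up).
    let arm: [CGPoint] = halfArm.map { CGPoint(x: $0.x, y: -$0.y) } + halfArm.reversed()
    var outline: [CGPoint] = []
    for k in 0..<6 {
        let angle = Double(k) * Double.pi / 3  // 60 degrees between arms
        let c = cos(angle), s = sin(angle)
        for p in arm {
            let x = Double(p.x), y = Double(p.y)
            // Standard 2D rotation of each arm point about the center.
            outline.append(CGPoint(x: x * c - y * s, y: x * s + y * c))
        }
    }
    return outline
}

#if canImport(UIKit) && canImport(SceneKit)
/// Hypothetical helper: extrudes the outline into a thin solid. Path coordinates are
/// interpreted as scene units, which ARKit treats as meters.
func makeSnowflakeNode(outline: [CGPoint], thickness: CGFloat = 0.002) -> SCNNode {
    guard let first = outline.first else { return SCNNode() }
    let path = UIBezierPath()
    path.move(to: first)
    outline.dropFirst().forEach { path.addLine(to: $0) }
    path.close()
    let shape = SCNShape(path: path, extrusionDepth: thickness)
    shape.firstMaterial?.diffuse.contents = UIColor.white
    return SCNNode(geometry: shape)
}
#endif
```

For example, a profile of `[CGPoint(x: 0.02, y: 0.008), CGPoint(x: 0.06, y: 0.006), CGPoint(x: 0.1, y: 0.004)]` gives a flake roughly 20 cm across. Mirroring and rotating a single profile guarantees six-fold symmetry, much like cutting a folded sheet of paper.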

A snowflake's drawing path, combined with its size, comes to life as a 3D model in Polar.

Setting up ARKit

There are two main components to getting ARKit up and running. First, we needed to set up world tracking, which is ARKit's ability to understand the world around the device. With ARWorldTrackingConfiguration, ARKit tracked both the device's rotation (turning it to look around) and its movement (walking around). This is commonly called six degrees of freedom (6DoF) tracking, since it covers three axes of rotation and three axes of movement.
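
A minimal sketch of that first step, assuming a UIKit view controller that hosts an `ARSCNView`, ARKit's SceneKit-backed view that draws the camera feed behind the 3D scene and owns an `ARSession`. The class name is hypothetical, and Polar's actual setup isn't shown in this post.

```swift
#if canImport(ARKit) && canImport(UIKit)
import ARKit
import UIKit

/// Hypothetical view controller hosting the AR scene.
final class PolarViewController: UIViewController {
    // Renders a SceneKit scene over the live camera feed and drives an ARSession.
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking needs an A9 or later processor; bail out on unsupported devices.
        guard ARWorldTrackingConfiguration.isSupported else { return }
        // Six degrees of freedom: device rotation plus movement through space.
        let configuration = ARWorldTrackingConfiguration()
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop camera capture and tracking while the view is off screen.
        sceneView.session.pause()
    }
}
#endif
```

Because ARKit uses the camera, a real app also needs an `NSCameraUsageDescription` entry in its Info.plist.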

The second component was anchoring our virtual content to the real world. We marked positions in the real world by creating objects called ARAnchors. Every time we added an anchor, we gave it an explicit position, usually relative to the device's current position; a snowflake, for example, might be placed 1 meter in front of the device and 2 meters to the left. Whenever an anchor was added, ARKit asked us for the snowflake model to display at that anchor's position. The big benefit is that we never had to manage our snowflakes' positions by hand; ARKit took care of it.
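
A minimal sketch of this anchor flow, under stated assumptions: the helper names are hypothetical, and the sphere is a placeholder for a generated snowflake model. The offset is applied in ARKit's camera space, where -z points in the viewing direction. That space is fixed to the device's landscape-right orientation, so a sideways offset such as "2 meters to the left" would need adjusting in portrait; the default here only moves the anchor 1 meter forward. The matrix math is kept separate so it can be checked without a device.

```swift
import Foundation
#if canImport(simd)
import simd
#endif
#if canImport(ARKit)
import ARKit
import SceneKit
#endif

#if canImport(simd)
/// Hypothetical helper: offsets `camera` by `offset` meters along the camera's own axes.
func anchorTransform(camera: simd_float4x4, offset: SIMD3<Float>) -> simd_float4x4 {
    var translation = matrix_identity_float4x4
    translation.columns.3 = SIMD4<Float>(offset.x, offset.y, offset.z, 1)
    // camera * translation applies the offset in camera space, not world space.
    return simd_mul(camera, translation)
}
#endif

#if canImport(ARKit)
/// Hypothetical helper: adds an anchor relative to the device's current pose.
func addSnowflakeAnchor(to session: ARSession, offset: SIMD3<Float> = SIMD3<Float>(0, 0, -1)) {
    guard let camera = session.currentFrame?.camera else { return }  // no frame yet
    let anchor = ARAnchor(transform: anchorTransform(camera: camera.transform, offset: offset))
    session.add(anchor: anchor)
}

/// Supplies a node for each new anchor; ARSCNView then keeps that node aligned with it.
final class SnowflakeSceneDelegate: NSObject, ARSCNViewDelegate {
    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        // Placeholder geometry standing in for a generated snowflake model.
        return SCNNode(geometry: SCNSphere(radius: 0.05))
    }
}
#endif
```

`ARSCNView.delegate` is a weak reference, so whoever owns the view should also keep a strong reference to the delegate object.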

Bringing ideas to life

I found Apple's documentation and guides incredibly helpful in creating the AR experience for Polar; Apple's sample code, for example, was all I needed to get started. Overall, ARKit struck the right balance between ease of use and the capabilities Polar needed, and it taught me a great deal about creating AR apps. What I appreciated most was how quickly ARKit let me create and iterate, which suited my Agile mindset. Rather than getting stuck in the technical details, I could focus on truly bringing our team's ideas to life.

Working on Polar was an exciting opportunity for me. I was lucky to work with such a dedicated team, which played a big part in the overall success of the final product. We brainstormed, prototyped, and iterated as a close-knit team and, in the end, built a unique and really cool AR experience.